MVTN: Multi-View Transformation Network for 3D Shape Recognition

Abstract

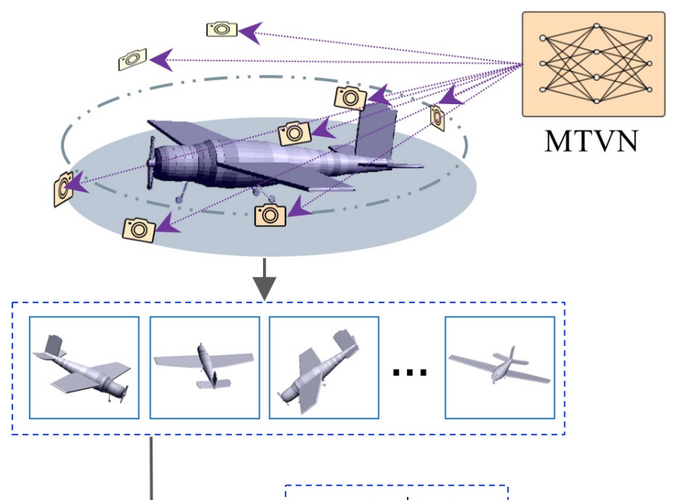

Multi-view projection methods have shown the capability to reach state-of-the-art performance on 3D shape recognition. Most advances in multi-view representation focus on pooling techniques that learn to aggregate information from the different views, while the camera viewpoints for those views tend to be heuristically set and fixed for all shapes. To circumvent the lack of dynamism of current multi-view methods, we propose to learn those viewpoints. In particular, we introduce a Multi-View Transformation Network (MVTN) that regresses optimal viewpoints for 3D shape recognition. By leveraging advances in differentiable rendering, our MVTN is trained end-to-end with any multi-view network and optimized for 3D shape classification. We show that MVTN can be seamlessly integrated into various multi-view approaches to exhibit clear performance gains in the tasks of 3D shape classification and shape retrieval without any extra training supervision. Furthermore, our MVTN improves multi-view networks to achieve state-of-the-art performance in rotation robustness and in object shape retrieval on ModelNet40.
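To make the contrast between fixed and learned viewpoints concrete, the following is a minimal sketch and not the MVTN implementation. It assumes viewpoints are parameterized by azimuth and elevation angles, and that a learned regressor emits unbounded per-view offsets that are squashed with `tanh` before being added to a fixed circular camera rig. The function names, the bounds, and the camera distance are all hypothetical, and the regressor itself and the differentiable renderer are omitted.

```python
import math


def circular_viewpoints(n_views, elevation_deg=30.0):
    """Fixed heuristic rig: cameras evenly spaced in azimuth at one elevation."""
    return [(360.0 * i / n_views, elevation_deg) for i in range(n_views)]


def apply_offsets(base_views, raw_offsets, max_azim_deg=45.0, max_elev_deg=30.0):
    """Shift each base viewpoint by a bounded, shape-dependent offset.

    `raw_offsets` stands in for the unbounded output of a learned regressor
    (hypothetical here); tanh keeps every offset within the given bounds.
    """
    views = []
    for (azim, elev), (d_azim, d_elev) in zip(base_views, raw_offsets):
        new_azim = (azim + max_azim_deg * math.tanh(d_azim)) % 360.0
        new_elev = elev + max_elev_deg * math.tanh(d_elev)
        new_elev = max(-90.0, min(90.0, new_elev))  # keep elevation valid
        views.append((new_azim, new_elev))
    return views


def camera_position(azim_deg, elev_deg, distance=2.2):
    """Convert a viewpoint to a Cartesian camera position looking at the origin."""
    azim, elev = math.radians(azim_deg), math.radians(elev_deg)
    x = distance * math.cos(elev) * math.sin(azim)
    y = distance * math.sin(elev)
    z = distance * math.cos(elev) * math.cos(azim)
    return (x, y, z)


if __name__ == "__main__":
    base = circular_viewpoints(4)
    # Made-up offsets standing in for a regressor's output for one shape.
    learned = apply_offsets(base, [(0.3, -0.2), (0.0, 0.5), (-1.0, 0.1), (0.8, 0.0)])
    for azim, elev in learned:
        print(camera_position(azim, elev))
```

With all offsets at zero the sketch reduces to the fixed rig, so a per-shape regressor of this kind can only depart from the heuristic setup when doing so helps the downstream classifier. Because `tanh` is differentiable, gradients could in principle flow from a classification loss back to the offsets, provided the rendering step is differentiable as well.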